It’s not easy being a Software License Manager.

It’s really not easy being a Software License Manager in a company which uses products from one or more of the “usual suspects” among the major software vendors. Some of the largest have spent recent years creating a licensing puzzle of staggering complexity.

There’s an optimistic school of thought which supposes that the next big change in the software industry – a shift to service-oriented, cloud-based software delivery – will make this particular challenge go away. But how true is this? To answer the question, we need to take a look back, and understand how we arrived at the current problem.

In short, today’s complexity was driven by the last big industry megatrend: virtualization.

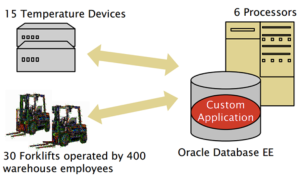

In an old-fashioned datacenter, licensing was pretty straightforward. You’re running our software on a box? License that box, please. Some boxes have got bigger? Okay, count the CPUs, thanks. It was nothing that should have been a big issue for an organized Asset Manager with an effective discovery tool. But as servers started to look a bit less like, well, servers, things changed, and it was a change that became rather dramatic.

The same humming metal boxes were still there in the data center, but the operating system instances they were supporting had become much more difficult to pin down. Software vendors found themselves in a tricky situation, because suddenly there were plenty of options to tweak the infrastructure to deliver the same amount of software at a lower license cost. This, of course, posed a direct threat to revenues.

The license models had to be changed, and quickly. The result was a new set of metrics, based on assessment of the actual capacity delivered, rather than on direct counting of physical components.

In 2006, in line with a ramping-up of the processor core count in its Power5 server offering, IBM announced its new licensing rules. “We want customers to think in terms of ‘processor value units’ instead of cores”, said their spokesman. A key message was simplification, but that was at best debatable: CPUs and cores can be counted, whereas processor-specific unit costs have to be looked up. And note the timing: This was not something that arrived with the first Power5 servers. It was well into the lifetime of that particular product line. Oh, and by the way, older environments like Power4 were brought into the model, too.
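To make the "counted versus looked up" distinction concrete, here is a minimal Python sketch. The chip family names and per-core ratings are invented for illustration and are not taken from any vendor's published table; the function names are hypothetical too.

```python
# Hypothetical per-core ratings. In practice these would come from the
# vendor's published table, which changes as new processors are released.
UNITS_PER_CORE = {
    "chip-family-a": 50,
    "chip-family-b": 100,
    "chip-family-c": 120,
}


def count_cores(servers):
    """Old-style metric: just add up the cores that discovery found."""
    return sum(server["cores"] for server in servers)


def count_value_units(servers, table=UNITS_PER_CORE):
    """Value-unit metric: every core is weighted by a looked-up rating."""
    total = 0
    for server in servers:
        chip = server["chip"]
        if chip not in table:
            # A processor missing from the table cannot be costed at all.
            raise KeyError(f"no rating published for {chip!r}")
        total += server["cores"] * table[chip]
    return total


servers = [
    {"name": "db01", "chip": "chip-family-a", "cores": 8},
    {"name": "db02", "chip": "chip-family-c", "cores": 4},
]

print(count_cores(servers))        # 12
print(count_value_units(servers))  # 880
```

The second function is barely longer than the first, but it depends on a table the Asset Manager does not control and has to keep current.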

And what about the costs? “This is not a pricing action. We aren’t changing prices”, added the spokesman.

For a vendor, that assertion is important. Changing pricing frameworks is a dangerous game for software companies, even if on paper it looks like a zero-sum game. The consequences of deviating significantly around the current mean can be severe: The customers whose prices rise tend to leave. Those whose prices drop pay you less. Balance isn’t enough – you need to make it smooth for every customer.

Of course, virtualization didn’t stand still from August 2006 onwards, and hence neither did the license models. With customers often using increasingly sophisticated data centers, built on large physical platforms, the actual processing capacity allocated to software might be significantly less than the total capacity of the server farm. You can’t get away with charging for hundreds of processors where software is perhaps running on a handful of VMs.

So once again, those license models needed to change. And, as is typical for revisions like this, sub-capacity licensing was achieved through the addition of more details, and more rules. It was pretty much impossible to make any such change reductive.

This trend has continued: IBM’s Passport Advantage framework, at the time of writing, has an astonishing 46 different scenarios modelled in its processor value unit counting rules, and this number keeps increasing as new virtualization technologies are released. Most aren’t simple to measure: the Asset Manager needs access to a number of detailed facts and statistics. Cores, CPUs, capacity caps, partitioning, the ability of VMs to leap from one physical box to another – all of these and more may be involved in the complex calculations. Simply getting hold of the raw data is a big challenge.
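As a rough illustration of why sub-capacity counting needs so much more data, the Python sketch below applies one deliberately simplified rule: on each physical host, count the virtual cores allocated to VMs running the product, capped at the host's physical core count. This rule, the host names and the field names are all assumptions made for the example. It is not a reproduction of any vendor's actual counting rules, which also have to deal with capacity caps, partitioning and VM mobility.

```python
from collections import defaultdict


def full_capacity_cores(hosts):
    """Full-capacity view: every physical core in the farm is licensable."""
    return sum(hosts.values())


def sub_capacity_cores(hosts, vms):
    """Simplified sub-capacity view (an assumed rule, for illustration only).

    Per host: sum the vCPUs of VMs that run the product, then cap the
    result at the number of physical cores in that host.
    """
    allocated = defaultdict(int)
    for vm in vms:
        if vm["runs_product"]:
            allocated[vm["host"]] += vm["vcpus"]
    return sum(min(allocated.get(host, 0), cores) for host, cores in hosts.items())


# Physical cores per host (hypothetical inventory).
hosts = {"host-a": 16, "host-b": 32}

vms = [
    {"host": "host-a", "vcpus": 4, "runs_product": True},
    {"host": "host-a", "vcpus": 16, "runs_product": True},   # over-allocated host
    {"host": "host-b", "vcpus": 2, "runs_product": True},
    {"host": "host-b", "vcpus": 8, "runs_product": False},   # other software
]

print(full_capacity_cores(hosts))      # 48
print(sub_capacity_cores(hosts, vms))  # 18
```

Even this toy version needs three data sets that the full-capacity count does not: which VMs exist, where each one runs, and which of them actually host the licensed product.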

Another problem for the Software Asset Manager is the fact that there is often a significant and annoying lag between the emergence of a new technology, and the revision of software pricing models to fit it. In 2006, Amazon transformed IT infrastructure with their cloud offering. Oracle’s guidelines for applying its licensing rules in that environment only date back to 2008. Until the models are clarified, there’s ambiguity. Afterwards, there are more rules to account for.

(Incidentally, this problem is not limited to server-based software. A literal interpretation of many desktop applications’ EULAs can be quite frightening for companies using widespread thin-client deployment. You might only have one user licensed to work with a specialist tool, but if they can theoretically open it on all 50,000 devices in the company, a bad-tempered auditor might be within their rights to demand 50,000 licenses.)

License models catch up slowly, and they catch up reactively, only when vendors feel the pressure to change them. This highlights another problem: despite the fine efforts of industry bodies like the SAM Standards Working Group, vendors have not found a way to collaborate. As the IBM spokesman put it in that initial announcement: “We can’t tell the other vendors how to do their pricing structure”.

As a result, the problem is not just that these license models are complex. There are also lots of them. Despite fundamentally measuring the same thing, Oracle’s Processor Core Factors are completely different to IBM’s Processor Value Units. Each vendor deals with sub-capacity in its own way, not just in terms of counting rules but even in terms of which virtual systems can be costed on this basis. Running stuff in the cloud? There are still endless uncertainties and ambiguities. Each vendor is playing a constant game of catch-up, and they’re each separately writing their own rules for the game. And meanwhile, their auditors knock on the door more and more.

Customers, of course, want simplification. But the industry is not delivering it. And the key problem is that pricing challenge. A YouTube video from 2009 shows Microsoft’s Steve Ballmer responding to a customer looking for a simpler set of license models. An edited transcript is as follows:

Questioner:

“Particularly in application virtualization and general virtualization, some of Microsoft’s licensing is full of challenging fine print…

…I would appreciate your thoughts on simplifying the licensing applications and the licensing policies.”

Ballmer:

“I don’t anticipate a big round of simplifying our licenses. It turns out every time you simplify something, you get rid of something. And usually what we get rid of, somebody has used to keep their prices down…

…The last round of simplification we did of licensing was six years ago…. it turned out that a lot of the footnotes, a lot of the fine print, a lot of the caveats, were there because somebody had used them to reduce their costs…

…I know we would all like the goal to be simplification, but I think the goal is simplification without price increase. And our shareholders would like it to be a simplification without price decreases…

…I’d say we succeeded on simplification, and our customer satisfaction numbers plummeted for two and a half years”.

In engineering circles there is a wise saying: “Strong, light, cheap: Pick any two”. The lesson from the last few years in IT is that we can apply a similar mantra to software licensing: Simple, Flexible, Consistently Priced: Pick any two.

Vendors have almost always chosen the latter two.

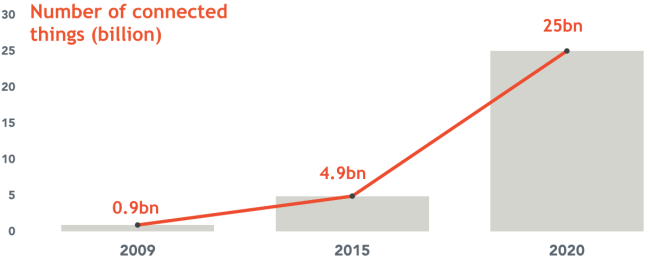

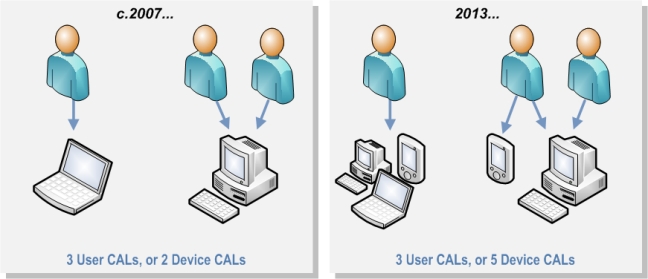

This brings us to the present day, and the next great trend in the industry. According to IDC’s 2011 Software Licensing and Pricing survey, a significant majority of the new commercial applications brought to market in 2012 will be built for the Cloud. Vendors are seeing declining revenues from perpetual license models, while subscription-based revenue increases. Some commentators view this as a trend that will lead to the simplification of software license management. After all, people are easier to count than dynamic infrastructure… right?

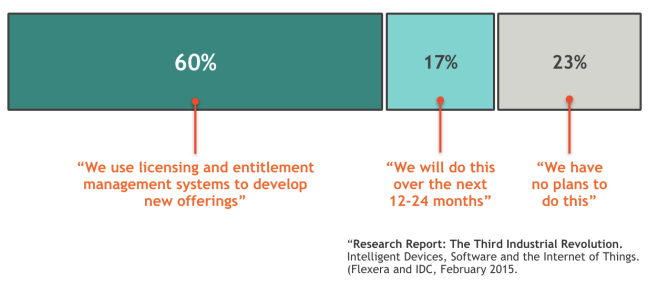

However, for this simplification to occur, the previous pattern has to change, and it’s not showing any sign of doing so. The IDC survey reported that nearly half of the vendors who are imminently moving to usage-based pricing models still had no means to track that usage. But no tracking will mean no revenue, so we know they’ll need to implement something. Once again, the software industry is in an individual and reactive state, rather than a collaborative one, and that will mean different metrics, multiple data collection tools, and a new set of complex challenges for the software asset manager.

And usage-based pricing is no guarantee of simplicity. A glance at the Platform-as-a-Service sector illustrates this problem neatly. Microsoft’s Azure, announced in 2009 and launched in 2010, promised new flexibility and scalability… and simplicity. But again, flexibility and simplicity don’t seem to be sitting well together.

To work out the price of an Azure service, the Asset Manager needs to understand a huge range of facts, including (but by no means limited to) usage times, usage volumes, and secondary options such as caching (both performance and geographic), messaging and storage. Got all that? Good, because now we have to get to grips with the contractual complications: MSDN subscriptions have to be accounted for, along with the impact of any existing Enterprise Agreements. Microsoft recognized the challenge and provided a handy calculator, only to acknowledge that “you will most likely find that the details of your scenario warrant a more comprehensive solution”. Simplicity, Flexibility, Consistent Pricing: Pick any two.
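A small Python sketch shows how quickly a usage-based bill picks up moving parts. Everything in it is hypothetical: the meter names, the rates, the allowance assumed to come bundled with an existing subscription, and the discount assumed to come from an existing agreement. None of these figures reflect real Azure pricing.

```python
from decimal import Decimal

# Hypothetical unit rates per meter, for illustration only.
RATES = {
    "compute_hours": Decimal("0.12"),
    "storage_gb": Decimal("0.15"),
    "messages_10k": Decimal("0.01"),
}


def monthly_charge(usage, rates=RATES, included=None, discount=Decimal("0")):
    """Price one month of metered usage.

    usage:    quantity consumed per meter
    included: quantity per meter already covered by a subscription (assumed)
    discount: fractional discount from an existing agreement (assumed)
    """
    included = included or {}
    total = Decimal("0")
    for meter, quantity in usage.items():
        # Only usage above the bundled allowance is billable.
        billable = max(Decimal(quantity) - Decimal(included.get(meter, 0)), Decimal("0"))
        total += billable * rates[meter]
    return (total * (1 - discount)).quantize(Decimal("0.01"))


usage = {"compute_hours": 1500, "storage_gb": 200, "messages_10k": 50}

# List price, with no contractual adjustments.
print(monthly_charge(usage))  # 210.50

# Same usage, with a bundled compute allowance and a 10% agreement discount.
print(monthly_charge(usage, included={"compute_hours": 750}, discount=Decimal("0.10")))  # 108.45
```

The arithmetic is trivial. The hard part is that each input (metered usage, bundled allowances, negotiated discounts) tends to live in a different system or contract, and the Asset Manager has to reconcile all of them.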

And, of course, the old models won’t go away either. Even in a service-oriented future, there will still be on-premise IT, particularly amongst the organizations providing those services.

Software vendors have painted themselves into a corner with their license models, and unless they can find a way to break that pattern, we face a real risk that the license management challenge will get even more complex. Entrenched complexity in the on-premise sector will be joined by a new set of challenges in the cloud.

The pattern needs to change. If it doesn’t change, be nice to your Software Asset Manager. They’ll need a coffee.