At DevOps conferences, I’ve observed some very negative sentiment about ITIL and ITSM. In particular, the Change Advisory Board is frequently cited as a symbol of ITSM’s anachronistic bureaucracy. They have a point. Enterprise IT support organisations are seen as slow, siloed, structures built around an outdated three-tier application model.

None of this should be a surprise. The Agile Manifesto, effectively the DevOps movement’s Declaration of Independence, explicitly values individualism over process, and reactiveness over structure. The manifesto is the specific antithesis of the traits seen in that negative perception of ITSM.

ITSM commentary on DevOps, meanwhile, is inconsistent, ranging from outright confusion to sheer overconfidence. The complaints of the DevOps community are frequently acknowledged, but they are often waved away on the basis that ITSM is “just a framework”, and hence it should be perfectly possible to fit Devops within that framework. If that doesn’t work, the framework must have been implemented badly. Again, this is a reasonable point.

But there’s a recurring problem with the debate: it tends to focus primarily on processes: two ITIL processes in particular. ITSM commentators frequently argue that Change Management already supports the notion of automated, pre-approved changes. DevOps “is just mature ITIL Release Management”, stated an opinion piece in the Australian edition of Computerworld (a remarkable assertion, but we’ll come to that later). Some of the more robust sceptics in the DevOps community focus on ITSM’s process silos and their incompatibility with the new agility in software development.

Certainly, the ITSM community has to realise that there is a revolution happening in software production. Here are some statements which are easy to back up with real-world evidence:

- DevOps methodology fundamentally improves some of the inefficiencies of old, waterfall-driven processes.

- Slow, unnecessarily cumbersome processes are expensive in themselves, and they create opportunity costs by stifling innovation.

- Agile, autonomous teams of developers are unleashing creativity and innovation at a new pace.

- By enabling small, point releases, systems are growing in a more resilient way than monolithic releases tended to achieve.

Unarguably, the new methodology is highlighting the shortcomings of the old. Can anyone argue today that a Change Advisory Board made of humans, collating verbal assurances from other humans, is preferable to an effective, fully-automated assurance process, seamlessly integrated with the release pipeline?

We know, then, that DevOps methods dramatically improve the speed and reliability with which technology change can increase business value. But that’s where the arguments on both sides start to wear thin. What is that business value? How do we identify it, measure it, and assure its delivery?

In my experience, there is little mention of the customer at DevOps events. DevOps is seen, correctly, as a new and improved way to drive business value from software development, but the thinking feels very “bottom up”. ITSM commentators seem to have taken the same starting point: drilling into minutiae of process without really considering the value that ITSM should be looking to bring to the new world.

To highlight why a lack of service context is a problem, let’s take the simple example of frontline support. When developers push out an incremental release to a product, customers start to use it. No matter how robust the testing of that release was, some of those customers will encounter issues that need support (not every issue is caused by a bug that was missed in testing, after all).

The Service Desk will of course try to absorb many of those issues at the first step. That is one of its fundamental aims. To do this effectively, it needs to have reasonable situational awareness of what has been changing. It is not optimal for the Service Desk only to become aware of a change when the calls start coming in. Ideally, they should be armed with knowledge of how to deal with those issues.

No matter how effective the first line of support is, some issues will get to the application team. Those issues will vary, as will the level of pain that each is causing. Triage is required, and that is only possible if there a clear understanding of the business and customer perspective.

Facing a queue of two tickets, or ten tickets, or one hundred tickets, the application team has to decide what to do first. This is where things start to unravel for an idealistic, full-stack, “you break it, you fix it” devops team. Which issues are causing business damage? Which are the most time critical? Which can be deferred? How much time should we spend on this stuff at the cost of moving the product forward? This is the stuff that ITSM ought to be able to provide.

Effective Service Management in any industry starts with a fundamental understanding of the customer. Who are they? What makes them successful? What makes them tell other potential customers how great you are? What annoys them? What hurts them? What will trigger them to ask for a refund? What makes them go elsewhere altogether? And, importantly: what is it that we are obligated to provide them with?

An understanding of our service provision is fundamental to creating and delivering innovative solutions, and supporting them once they are there. This is where ITSM can lead, assist, and benefit the people pushing development forward.

The “S” in ITSM stands for “service”, not process. The heavy focus on process in this discussion (particularly two specific process, close to the point of deployment) has been a big mistake by both communities. It is wholly incorrect to state that DevOps is predominantly contained within Release and Change Management. Code does not appear appear spontaneously in a vacuum. A whole set of interconnected events lead to its creation.

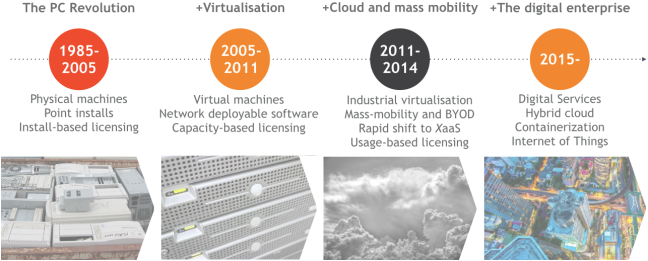

I have been in IT for two decades, and the DevOps movement is by the biggest transformation in software development methodology in that time (I still have the textbooks from my 1990s university Computing course. These twenty-year-old tomes admonish the experimenting “hacker” and urge the systems analyst to complete comprehensive designs before a line of code is written, as if building software was perhaps equivalent to constructing a suspension bridge).

The cultural change brought by DevOps involves the whole technology department… the whole enterprise, in fact. Roles change, expectations change. There are questions about how to align processes, governance and support. We need to think about the structure of our teams in a post three-tier world. We need to consider new support methodologies like swarming. We need to thread knowledge management and collaboration through our organizations in innovative new ways.

But the one thing we really must do is to start with the customer.